Observability With Christine Yen

Posted on Monday, Dec 9, 2019

Transcript

Matt Stratton: Welcome to Page It To The Limit, a podcast where we explore what it takes to run software in production successfully. We cover leading practices in the software industry to improve both system reliability and the lives of the people supporting those systems. I’m your host, Matt Stratton, @MattStratton on Twitter. Today’s episode was recorded during PagerDuty Summit in 2019. I spoke with Christine Yen, the CEO and cofounder of Honeycomb. If you’ve ever wondered, what’s up with this observability thing I keep hearing about, this episode is exactly for you. I am here at PagerDuty Summit with cofounder and CEO of Honeycomb, Christine Yen. Christine, welcome to the show.

Christine Yen: Thanks. Thanks for having me.

Matt Stratton: Christine gave a great talk today, thinking about the need for developers and engineers to be able to understand their applications, understand their systems. Before we get into some of the technical and practitioner stuff, Christine, you want to tell us a little bit, maybe a little teaser about your talk? What were some of the topics?

Christine Yen: I love this talk because when folks hear observability or monitoring, they think this is for ops people. It’s for SREs. This is for the folks who worry about what happens after the code gets shipped. I look over, and I know that to not be the case. Observability is as important, if not more important, for the folks who are writing the code and shipping it. I love getting to speak to rooms of folks and tell them stories, and show them how they can get there as well.

Matt Stratton: Right. Let’s take it back a second. We hear a lot about observability. At least I hope we do. If you aren’t hearing a lot about observability, you’re following all the wrong people on Twitter. We can give you some suggestions. Let’s take a step back because I’ve heard a lot of different definitions. Like a lot of words in our industry, the words mean whatever whoever’s selling you something wants them to mean, unless it’s me. Let’s go back. In your estimation, in your approach, what is observability all about?

Christine Yen: I am someone who likes to go back to basics as well. If we look at the word observability, honestly, it has to be about something that you can do. Something that you can do to practice. It’s got to be verb based. I really think of it as the ability to ask questions of your system, ideally without having to redeploy code each time. The word, I think, is really something that Charity found in [inaudible 00:02:48] because it allowed us to really reflect the fact that the world and the assumptions of what we are trying to do are different than the monitoring world. Monitoring is still an important part of keeping your production stable, but it relies on being able to predict what you care about. With the way that the rest of the world is changing, the way that the rest of our technology is changing, that ability to predict is diminishing. We need to be able to ask questions. We need to be able to flex with our changing technology and observe the unknown unknown conditions. Right? Monitoring is for the known unknowns. We know that CPU should not… Sorry, I know that disk space should not exceed 90% utilized. Observability is for the unknown unknowns. I’m like, I don’t know how something is going to go wrong tomorrow, but I better be able to tell when something has deviated, when customers aren’t able to do the [inaudible 00:03:51] that they expect to be able to do.

Matt Stratton: Every time we get into conversations about observability, it reminds me of a repeated conversation I had with a CTO of mine 10 years ago, where we would have an incident of some kind, a system would go down. Invariably afterwards, my CTO would say to me, why weren’t you monitoring for this? I would say, because until last night I didn’t know that was a thing that could happen. It’s interesting, then, when a lot of this started to come out, it was like, that’s that conversation. Again, it’s the questions we know to ask. Right? I feel like observability, again, like you said, it’s the unknown unknowns. It’s questions. Because what we can’t do during an incident is invent a time machine and go back and throw in some monitors that we didn’t have before. Right?

Christine Yen: Right. Well, and the thing that happens, and I’m sure this happened at the end of that conversation, is this: I guess I will add some more dashboards or alerts to catch it next time. It never happened again. You end up with pages and pages of stale dashboards, artifacts of past failures that are just never going to matter. That sort of thing contributes to feeling overwhelmed, having too much information and no answers. All of that is a sign of a broken initial approach, which is that we can predict what will go wrong.

Matt Stratton: It also goes back to this missing concept of not having the understanding that all incidents are unique. Right. Yes, every snowflake has six sides. There’s commonality. Every incident there’s something different about it.

Christine Yen: Hopefully.

Matt Stratton: Right. Yeah. Well, I mean… The idea of building safeguards based upon what we learned from an incident, specifically, because this action happened, we will now put in a monitor for the next time. That’s assuming that there will be incidents that have that same shape many times. It eliminates this understanding that edges are rough around that. Right? As we start to… Our systems are so complex, right? There was a time when we were running a LAMP stack when you could sit there and you could say, yep, there’s about five things that can go wrong because there’s five connections. Actually, that’s probably not even super true. It’s exponentially more complex today.

Christine Yen: In many ways, it’s like taking the things that we did in the monitoring world and not throwing them out the window but looking at them through a different lens. When an incident occurs, let’s say you have some very busy API. You expect the latency at your API to be below some certain threshold. When you have that initial signal of latency exceeding the threshold, those first few steps are often the same. The first few things you look at. The first few culprits. It starts to feel like, well, this is a monitoring shaped problem. Maybe it’s the same thing again. Those deviations, that’s where it’s interesting. Yet, in this observability world, Honeycomb’s thesis is, well, you should preserve these intermediate steps also. Let’s capture a paper trail of what we did. Let’s see what we can learn from that each time. Let’s just have a tool that’s flexible and fast enough that we can run through these intermediate steps to confirm and learn, make sure we’re on the right track. There’s so much… I’ve had a lot of good conversations with folks here today about burnout. People who are feeling stressed out, and single points of failure. How do we invest in this thing when everyone’s so overwhelmed? You step back, and you’re like, how do you learn and share responsibility without technology? Right? You ask someone. How did you look at it that time? How did you figure this out? The more that we can recognize that’s how people want to learn, and build into our tools the idea that people want to investigate, that people want to explore, and that there’s learning to be done by watching people explore, the better the tools and the experience will ultimately be.

Matt Stratton: Can we maybe… We don’t have to get super specific product-y. If it helps for the illustration, that’s okay. No one will get upset. Because we’re going to probably talk about PagerDuty at some point on this podcast. Because PagerDuty pays the bills. Anyway, I want to think from an incident perspective. If we look through… We talked about that paper trail. Hopefully we got our alerts in our PagerDuty that’s waking us up. It’s waking us up based on some business metric according to an SLO of some kind, a service level objective. It’s not waking me up because of 90%, or SQL Server’s using all the memory like it’s supposed to anyway. This is coming in. I’ve got an incident. We started an incident because an SLO was exceeded. Now how do I start using observability practices to go through this restoration of service?

Christine Yen: I love that we’re starting with SLOs, business metrics. Absolutely that’s the right thing to get out of bed for. The thing that we might think of is, when you’re investigating something you’re entering a giant space of possibilities. A good tool for an investigation will help you start to hone in on the signal. The possible source of the issue, or the anomaly, whatever. Ignore all the [inaudible 00:09:13]. Old school world, you had a bunch of dashboards, and you used your eyeballs to pick out the tiny squares that had the interesting signals in them. With an observability tool like Honeycomb, there are many possible ways to do that. Let’s break down by customer. Or let’s break down by endpoint. Often the person on call, based on what’s going on, will have an idea of the sorts of ways that they want to start slicing this problem domain, this search space. With Honeycomb specifically, sometimes even search space source, if someone’s new to being on call, they don’t know which signals are important. They don’t know that a single customer might be correlated to a particular endpoint. We have something we call Bubble Up. It lets you actually say, hey, here’s this anomalous area on [inaudible 00:10:06]. Tell me what’s special inside this left section relative to everything else.

Matt Stratton: This is something that the tool is saying. Honeycomb can identify this looks a little squirrely. It helps get your attention maybe.

Christine Yen: Exactly. Ultimately, we are not trying to give you answers. You vet your systems. You know your systems best. What we can say is, hey, there is some statistical interesting-ness over here, where most of the points in this area that you said are weird seem to be correlated with this user.
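To make the "break down by customer or endpoint" idea concrete, here is a minimal Python sketch. It is an assumption-laden illustration, not Honeycomb's Bubble Up algorithm or API: the event fields (`customer_id`, `endpoint`, `duration_ms`), the sample data, and both helper functions are hypothetical. It groups events by one field, then compares how often each field value shows up inside a selected "weird" region versus everywhere else.

```python
from collections import Counter, defaultdict

# Hypothetical request events; field names and values are invented for illustration.
events = [
    {"customer_id": "acme", "endpoint": "/export", "duration_ms": 2400},
    {"customer_id": "acme", "endpoint": "/export", "duration_ms": 2100},
    {"customer_id": "acme", "endpoint": "/home", "duration_ms": 40},
    {"customer_id": "globex", "endpoint": "/home", "duration_ms": 35},
    {"customer_id": "globex", "endpoint": "/export", "duration_ms": 90},
    {"customer_id": "initech", "endpoint": "/home", "duration_ms": 50},
]


def break_down(events, field):
    """Group events by one field and return the worst (max) duration per group."""
    worst = defaultdict(int)
    for event in events:
        worst[event[field]] = max(worst[event[field]], event["duration_ms"])
    return dict(worst)


def compare_selection(events, is_selected, fields):
    """Rank field values by how over-represented they are in the selection.

    For each (field, value) pair, compute its share of the selected events
    minus its share of the remaining events. This is a simple stand-in for
    "what is special about this region", not any vendor's real method.
    """
    selected = [e for e in events if is_selected(e)]
    rest = [e for e in events if not is_selected(e)]
    diffs = []
    for field in fields:
        in_selected = Counter(e[field] for e in selected)
        in_rest = Counter(e[field] for e in rest)
        for value in set(in_selected) | set(in_rest):
            share_selected = in_selected[value] / len(selected) if selected else 0.0
            share_rest = in_rest[value] / len(rest) if rest else 0.0
            diffs.append((share_selected - share_rest, field, value))
    return sorted(diffs, reverse=True)


by_customer = break_down(events, "customer_id")
ranking = compare_selection(
    events,
    is_selected=lambda e: e["duration_ms"] > 1000,  # the "weird" region
    fields=["customer_id", "endpoint"],
)
for diff, field, value in ranking[:2]:
    print(f"{field}={value} is over-represented by {diff:.2f}")
```

The output is a nudge rather than an answer: it only says which values are unusually common among the slow requests, and the person on call still decides what that means.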

Matt Stratton: Might be nothing.

Christine Yen: Yeah. It’s all about reducing the search space so that you can start to be like, okay, is this something where I can stop the bleeding quickly, or is this a widespread systemic problem? That’s the difference between, okay, crap, it’s that one customer again. I’m going to turn them off. Go back to bed.

Matt Stratton: Right.

Christine Yen: Gosh. Everything’s [inaudible 00:11:05].

Matt Stratton: Correct me if I’m wrong. I could see how the Bubble Up feature is also helpful to keep us from going down the same, always looking for the same root cause. Hold up. We’re going to have a future episode of Page It To The Limit where we talk about the word, root cause. For now, that’s a thing. Right?

Christine Yen: Yeah.

Matt Stratton: Where it can be like, this happened. It’s usually this, and I’m going to go look in this place I always look. When I don’t see the answer I get very confused, when it is something that’s not like the 99 other times we’ve had this. Like you said, the search space is really huge, the problem domain is wide, helping us maybe… I hate to say, think outside the box. That’s kind of cheesy. Think outside of our normal kind of repeating process. There are different shapes of incidents.

Christine Yen: Yeah. This is why I resist strongly the idea that AI can be sprinkled like dust on it to solve the problem. Because any of these incident response situations, it’s like a mystery. It’s investigation. It’s a diagnosis. There’s so much context that humans have in their brains to know whether it’s [inaudible 00:12:20] doesn’t. It’s that cluster again. There’s so much institutional knowledge that’s just spread even across grades. For example, it’s been a while since I’ve been on call. A couple years ago there was an outage. It was 5:00 AM. It was early enough that I felt bad waking anyone else up. I was trying to debug, and it was the [inaudible 00:12:46] customers. I knew that [inaudible 00:12:49]. The ability to start. Okay, well, paper trail. What was the paper trail that you left? What were the things that he looked at? What cues could I pick up on of what would be interesting, even if I don’t personally know where to look?

Matt Stratton: Yeah. I think that’s the thing. The power of our human brain is that ability to make intuitive leaps. Sometimes it’s five in the morning or three in the morning, we want to find the… We don’t want to make the intuitive leap. We want the answer that makes sense. It’s the same thing it’s been the last few times. Having something that helps us think, but doesn’t make the answer. The AI answer would be… It bubbles this up, and Bubble Up goes, it must be this. I’ll do a thing. Really I like this thought that it’s sort of a nudge. Think about this. Probably nothing. Might do that. Sometimes we need helpers like that. Just a thing. Is there also some help and value in terms of when I’m a responder and I don’t have deep domain knowledge of this particular problem ever happening before? Because again, it’s about the questions. It seems to me this is a tool, not even a tool but a practice. A space that helps us think of questions to ask that we may not have thought to ask. If we could ask a question, we can answer it. To me, the biggest challenge is figuring out what questions to ask in the first place. Does this help us ask better questions maybe?

Christine Yen: Sometimes. Honestly there’s a difference, right? Certainly you would also not want to be sitting around spending time thinking about the best solution.

Matt Stratton: Yeah.

Christine Yen: Asking the right question is one approach. Asking good enough questions is another. This is where speed of iteration is so important. Again, as we’re exploring this search space of, where is the root cause? Where should I be looking?

Matt Stratton: What are some of the contributing factors.

Christine Yen: Yeah. The ability to be like, well, maybe it’s under this rock. Nope. Maybe it’s under that rock. The faster you can get through those hypotheses and rule them out, the faster you can get towards that answer. We spend a lot of time thinking about, how can we make this easier for someone new to being on call? How can we make something easier for someone new to a team? How do we help folks share that context? It’s funny that you used the word, the phrase leaps of intuition. I’m remembering a talk at [inaudible 00:15:32] a couple years ago where someone… I don’t remember the company. Someone was onstage talking about how she would watch senior engineers be on call, and it was just like they were making these magical leaps of intuition. Ultimately, an approach can be, slow down, what are you doing? I can help you through it. Another could be, see where they go. See where they back up. By understanding the path they take, by being able to examine that paper trail, you can learn to make those leaps as well. Again, so much of this industry, you build up experience by just trial and error.

Matt Stratton: Yeah.

Christine Yen: You experiment and you try and you see how it turns out. That’s why the speed of iteration and seeing other people’s paths are… those are some of the best they’re replacing.

Matt Stratton: One bit of controversial statement that we’ve made in our incident command training: if you can’t decide between two equally bad actions to restore a service, flip a coin. Because making the wrong decision is better than making no decision. Because if you make the wrong decision, you get information. What I’m thinking about is maybe having observability techniques and practices available reduces the cost of questions. Because answering a question can be very expensive. I mean that just in terms of time and effort and everything. If it’s going to take a lot of work to answer a question, you’re going to spend a lot of… I was thinking when you were talking about it, is it about asking the right question or asking a lot of questions? If questions are expensive to answer, you’re going to want to make sure that the question that you pick is the right one. You’re going to spend a lot of time on that. If you’re like, it’s cheap to answer questions. Okay, I’ll ask this one real quick.

Christine Yen: Honestly, I think what happens more often than people spending a lot of time thinking about the right question to ask is them being like, screw it. I’m not going to ask any questions. I’m just going to make guesses. I’m going to throw some levers and maybe it will just fix the problem. That is an approach, certainly.

Matt Stratton: That’s been half my career.

Christine Yen: Yeah. One of the things actually our present directly described is that, by being able to use Honeycomb in their response, they’re able to guess less.

Matt Stratton: Okay.

Christine Yen: Make sure that they were doing a thing that actually would impact the problem.

Matt Stratton: I’m sold. This is the way of the future. It will make it all amazing. How do we get started? Like everything else, I understand transformation. If you’re listening, hear this and repeat it. Hit back on this podcast a bunch of times and listen to this sentence over and over again. Start small, iterative change, do not snap your fingers and be the DevOps. Okay, so if we understand that, we’re going to start small. We’re going to do things like that. How do we get ourselves into a place to be able to start benefiting from these practices and these approaches?

Christine Yen: I’m so glad you added that sentence, disclaimer. On one hand, my very official Honeycomb hat would be like, we have all these libraries that make it very easy to… Certainly, I’ll come back to that.

Matt Stratton: That’s not an understatement.

Christine Yen: Not an understatement. That can be iterative. Okay, I’ll come back to that. So many people think of observability instrumentation as this thing they have to get right, and it has to be this big initiative, and you have to get all the requirements, and it has to work across all of your services. I’m like, again, if you have sufficiently low pain that you have the luxury of time to do that, awesome, good. Do what works for your organization. For the rest of us, things are on fire all the time. I like to talk about incremental steps from just unstructured text logs. Everyone has unstructured text logs somewhere. Maybe they’re garbage, maybe they’re not. There’s some rhetoric floating out there that’s like, logs are garbage. Logs aren’t garbage if that’s all you have. The fact that logs are so free form means that you can start to make incremental changes there towards a future that lends itself to faster tools and this workflow that we’re describing. You start with unstructured logs. You start adding some business entities that you care about, things that will help you debug and reduce that search space. Business-level identifiers. For us it’s always customer id, data set id, sometimes [inaudible 00:19:50] repetition id. Things that we know tend to be important or useful in reducing that search space in a meaningful way. We start to add those identifiers, ideally in some semi structured format, key value pairs. Then you’re like, okay, I’ve got some business identifiers in here. I can start to understand the context of why error 503, why that line matters, who it’s impacting. Going from there to fully structured logs. Ultimately we’re not in a world anymore where human readability is as important. Use JSON logs, use structure, use [inaudible 00:20:24], use whatever structure you want. Have it be a format that can be quickly and efficiently ingested and retrieved by machines. Unstructured text logs are not.
No one wants to be running [inaudible 00:20:38] during incidents. Let’s just put that out there. Once you have the structure you can start to think about this data in terms of analysis rather than inquiry. So many people think about logs as [inaudible 00:20:47]. Well, I don’t know how to find the problem unless I know what to look for. Well, okay, what if you can start… If you take a step back, you can probably describe signals that your system is behaving correctly. Handling some requests. Latencies under some small threshold. Those are values that you can start to calculate based on processing lots of these individual structured logs. Great. You’re starting to think analytically. Okay, request line where question drops. That’s just counting the number of logs. You can start to build in this muscle of, logs are not things that you comb through. They are things that you aggregate and pull signals from. From there you can go to tracing structure. You can reduce the redundancy of your logs, useful events. Instead of emitting 17 log lines per function, you get one per… There’s lots of directions you can go. But taking steps along this path towards better observability of your systems doesn’t have to be a… I promised to return to the libraries, as I mentioned. Our libraries, we call them Beelines. They are things that you can drop into your application and can get you quickly to a baseline, capturing common metadata, common frameworks. Again, similarly, that’s not a solution on its own. We can only capture common metadata. We can’t capture the stuff that matters to you. There also, it should be an iterative process. You should be like, well, I want to add customer id and customer partition because they matter to me today. Maybe next week I’ll have something new. I’ll add… Maybe we’ll choose the concept of [inaudible 00:22:25] id. We throw that in. Instrumentation, by nature, the same way that tests and documentation should evolve with the code,
instrumentation should evolve, because you’re going to want to ask different questions as your code grows.
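The incremental path described above (unstructured text, then key value pairs with business identifiers, then fully structured events that get aggregated rather than combed through) can be sketched in Python roughly as follows. This is a hypothetical illustration using only the standard library, not Honeycomb's Beelines or any real logging setup; the field names, sample values, and the `make_event` and `summarize` helpers are assumptions.

```python
import json
from collections import Counter

# Step 1: an unstructured line. Readable, but hard for a machine to query.
unstructured = "error 503 while handling export request"

# Step 2: semi-structured key=value pairs with business identifiers added.
semi_structured = "status=503 customer_id=acme dataset_id=42 duration_ms=1850"
parsed_pairs = dict(pair.split("=", 1) for pair in semi_structured.split())


# Step 3: fully structured JSON, one event per request.
def make_event(**fields):
    """Serialize one request's fields as a single JSON log line."""
    return json.dumps(fields, sort_keys=True)


log_lines = [
    make_event(customer_id="acme", dataset_id=42, status=503, duration_ms=1850),
    make_event(customer_id="acme", dataset_id=42, status=200, duration_ms=120),
    make_event(customer_id="globex", dataset_id=7, status=200, duration_ms=95),
    make_event(customer_id="globex", dataset_id=7, status=500, duration_ms=2300),
]


def summarize(lines, slow_threshold_ms):
    """Aggregate structured logs into signals instead of reading them one by one."""
    events = [json.loads(line) for line in lines]
    errors = Counter(e["customer_id"] for e in events if e["status"] >= 500)
    slow = sum(1 for e in events if e["duration_ms"] > slow_threshold_ms)
    return {
        "requests": len(events),          # request count is just counting log lines
        "slow_requests": slow,            # latency over a chosen threshold
        "errors_by_customer": dict(errors),
    }


summary = summarize(log_lines, slow_threshold_ms=1000)
print(summary)
```

Adding a new identifier later is a one-field change to `make_event` calls, which is the sense in which instrumentation can evolve alongside the code.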

Matt Stratton: As we’re kind of coming back to this. People want to learn more, the likes of this podcast. Maybe people who are listening right now. I hope you like to listen to podcasts, or else you just wasted the last 25 minutes, or reading. Where are some great resources for people who want to build up their observability knowledge muscle?

Christine Yen: We have a resources section on our website, honeycomb.io, that contains media of whatever you like to consume. I really recommend our white paper section. In particular, if you liked my talk today, or my description of the talk, it treats you. We have an observability for developers white paper that talks through some of these ideas and how it benefits the development process. We also have webinars where we talk through various pieces of, what do your triggers look like? What does instrumentation look like? If you’re a person who’s like, I don’t want to have to learn, I just want to play and see how it feels, if you go to play.honeycomb.io we have a couple of nice little canned scenarios. For one of them in particular, we took data from an outage that we had in May of 2018. We, like, froze it in time, loaded it into this demo data set, and then basically encoded in a little story. There’s a little widget that’ll pop up and it’ll walk you through using Honeycomb to try and address, solve the outage, or investigate the incident.

Matt Stratton: That’s super fun. One final thing. If there’s one thing you could tell our audience about observability you want them to remember. What would that be?

Christine Yen: It’s not just for ops people.

Matt Stratton: It’s not just for ops people. Christine, thank you for being my guest here on Page It To The Limit. I really appreciate it. I learned a lot. I’ve been reading a lot about observability, but it’s great to be able to sit down and ask direct questions.

Christine Yen: Thank you for having me. This is fun.

Matt Stratton: I’m Matt Stratton wishing you an uneventful day. That does it for another installment of Page It To The Limit. We’d like to thank our sponsor PagerDuty for making this podcast possible. Remember to subscribe to this podcast if you like what you’ve heard. You can find our show notes at pageittothelimit.com. You can reach us on Twitter at pageit2thelimit using the number 2. That’s pageit2thelimit. Let us know what you think of the show. Thank you so much for joining us and remember uneventful days are beautiful days.

Guests

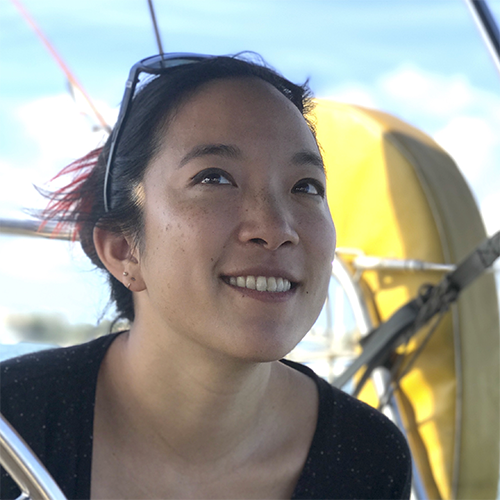

Christine Yen

Christine delights in being a developer in a room full of ops folks. As a cofounder of Honeycomb.io, a startup with a new approach to understanding production systems, she cares deeply about bridging the gap between devs and ops with technological and cultural improvements.

Hosts

Matt Stratton (He/Him)

Matt Stratton is a DevOps Advocate at PagerDuty, where he helps dev and ops teams advance the practice of their craft and become more operationally mature. He collaborates with PagerDuty customers and industry thought leaders in the broader DevOps community, and back in the day, his license plate actually said “DevOps”.

Matt has over 20 years of experience in IT operations, ranging from large financial institutions such as JPMorganChase to internet firms, including Apartments.com. He is a sought-after speaker internationally, presenting at Agile, DevOps, and ITSM focused events, including ChefConf, DevOpsDays, Interop, PINK, and others worldwide. Matty is the founder and co-host of the popular Arrested DevOps podcast, as well as a global organizer of the DevOpsDays set of conferences.

He lives in Chicago and has three awesome kids, whom he loves just a little bit more than he loves Doctor Who. He is currently on a mission to discover the best phở in the world.